(2/17/2026) Latest: This finetune series will probably not be updated.

Anima is a wonderful model, but it has a very restrictive license.

I'm fine with dual licenses (non-commercial + commercial). We all know that training a model costs a lot of $. A commercial license is necessary. Commercial means $, and $ means a better model.

I didn't expect them to keep the right to "sell" your "non-commercial Derivatives". You don't even have the right to keep your "non-commercial Derivatives" non-commercial (copyleft), because they keep the right to apply their commercial license to your "non-commercial Derivatives".

In my personal opinion, that's a little bit greedy. Unfortunately, it's too restrictive for my personal situation.

So, this model will not be further finetuned.

Many models are coming up. It's still too early to say which is the best.

E.g. Chroma2, which should be Apache 2.0 and is based on klein 4b. Much better than cosmos pt2.

https://huggingface.co/lodestones/Chroma2-Kaleidoscope

Back on topic. v0.12fd update notes:

Better (?) stability and details.

RDBT [Anima]

Finetuned from circlestone-labs/Anima. Experimental, but it works.

The dataset contains natural language captions from Gemini, but it still contains danbooru tags as well. Every image in the dataset was handpicked by me. It covers common enhancements such as clothes, hands, and backgrounds.

You must specify styles in your prompt.

Always use LoRA strength 1 on the checkpoint this LoRA is based on, unless you know what you are doing.

Models are CFG distilled:

Prefer the Euler a sampler.

Use CFG scale 1 to generate 2x faster.

Use CFG scale 1~2 to get a possibly better image.

Model bias might be amplified. Default styles that do not need trigger words (that is, bias) might get stronger, e.g. styles from a style LoRA. Styles that need a trigger (not bias) might get weaker, e.g. the base model's built-in styles.
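
Why CFG scale 1 is roughly 2x faster: with classifier-free guidance, each step normally runs the model twice (with and without the prompt) and mixes the two results. At scale 1 the mix reduces to the prompted result alone, so the second pass can be skipped. A minimal sketch of that idea, assuming a toy stand-in model (`CountingModel` and `guided_prediction` are hypothetical names for illustration, not part of any real sampler):

```python
def guided_prediction(model, x, cond, uncond, cfg_scale):
    """One classifier-free guidance step.

    `model` is any callable (x, conditioning) -> prediction.
    It is a stand-in here, not a real diffusion model.
    """
    cond_pred = model(x, cond)
    if cfg_scale == 1.0:
        # uncond + 1 * (cond - uncond) == cond, so the
        # unconditional pass would not change the result.
        return cond_pred
    uncond_pred = model(x, uncond)
    return uncond_pred + cfg_scale * (cond_pred - uncond_pred)


class CountingModel:
    """Toy model that only counts how many forward passes it ran."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, conditioning):
        self.calls += 1
        return x * 0.5 + conditioning


toy = CountingModel()
guided_prediction(toy, 1.0, cond=0.2, uncond=0.0, cfg_scale=1.0)
calls_at_cfg_1 = toy.calls  # one forward pass

toy = CountingModel()
guided_prediction(toy, 1.0, cond=0.2, uncond=0.0, cfg_scale=4.0)
calls_at_cfg_4 = toy.calls  # two forward passes
```

Any scale other than exactly 1 needs both passes again, so the 1~2 range trades that speedup for possibly better images.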

Why LoRA?

I only have ~20k images. A LoRA is enough.

I can save VRAM when training and you can save 98% storage and data usage when downloading.
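
A rough illustration of why a LoRA file is so much smaller: for each adapted weight matrix, a LoRA stores two thin matrices instead of the full one. The layer size and rank below are hypothetical numbers picked for the arithmetic only; they are not the actual shapes or rank of this model, and the real saving depends on the rank and on which layers are adapted.

```python
def lora_size_ratio(d_in, d_out, rank):
    """Parameters of a rank-`rank` LoRA pair relative to the full matrix."""
    full_params = d_in * d_out           # the original weight matrix
    lora_params = rank * (d_in + d_out)  # the down and up projection matrices
    return lora_params / full_params


# Hypothetical 2048x2048 layer adapted at rank 32.
ratio = lora_size_ratio(2048, 2048, 32)
print(f"LoRA stores {ratio:.1%} of the full layer's parameters")
```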

Why CFG distilled?

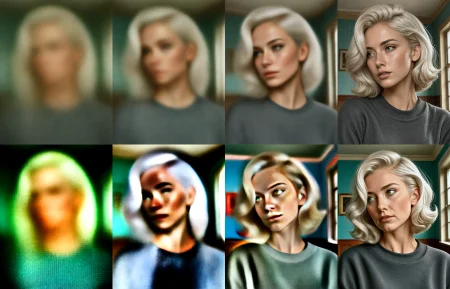

This is what the sampling process looks like. Top: rdbt; bottom: CFG 4. You can find more examples and the workflow in the cover images.

Advanced usage: mixed sampling settings

Probably only doable in ComfyUI. You can use the distilled model to sample the early steps (e.g. 0-20%) and the normal model to sample the rest of the steps. This way, you get a stable image composition (low level signal) while not losing styles (high level signal).

See the cover images for how to connect multiple "KSampler (Advanced)" nodes together.
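
To make the split concrete, here is a small sketch that works out the step ranges for two chained "KSampler (Advanced)" nodes. The field names mirror that node's widgets (`add_noise`, `steps`, `start_at_step`, `end_at_step`, `return_with_leftover_noise`, `cfg`). The helper `split_steps`, the 30 total steps, and CFG 4 for the normal model are assumptions for illustration; the workflow in the cover images is the reference.

```python
def split_steps(total_steps, distilled_fraction=0.2):
    """Settings for two chained sampler nodes sharing one schedule.

    The first node (distilled model) handles the early steps and hands over
    a still-noisy latent; the second node (normal model) finishes it.
    """
    switch = round(total_steps * distilled_fraction)
    # Keep at least one step for each node.
    switch = max(1, min(total_steps - 1, switch))

    distilled_node = {
        "add_noise": "enable",                   # start from fresh noise
        "steps": total_steps,
        "start_at_step": 0,
        "end_at_step": switch,
        "return_with_leftover_noise": "enable",  # pass the unfinished latent on
        "cfg": 1.0,
    }
    normal_node = {
        "add_noise": "disable",                  # latent is already noisy
        "steps": total_steps,
        "start_at_step": switch,
        "end_at_step": total_steps,
        "return_with_leftover_noise": "disable",
        "cfg": 4.0,                              # assumed value for the normal model
    }
    return distilled_node, normal_node


distilled_node, normal_node = split_steps(30, 0.2)
print(distilled_node["end_at_step"], normal_node["start_at_step"])
```

Both nodes should use the same total step count, sampler, and scheduler so the second one picks up at the noise level where the first one stopped.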

Versions

f = finetuned

d = cfg distilled.

Based on anima preview:

(2/19/2026) v0.12fd: Better stability and details.

(2/12/2026) v0.6d: CFG distilled only. No finetuning. Cover images use Animeyume v0.1.

(2/3/2026) v0.2fd: Finetuning + CFG distillation. Speedrun attempt, mainly for testing the training script. Limited training dataset that only covered "1 person" images plus a little bit of "furry". But it works, and way better than I expected.