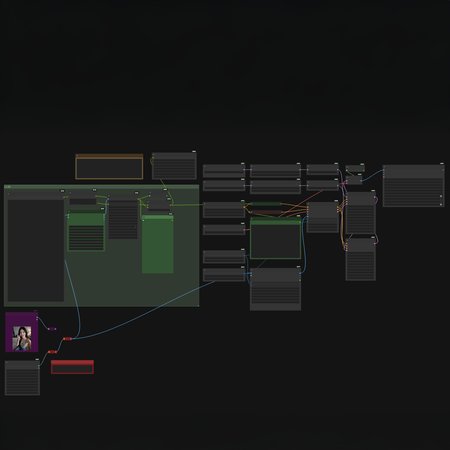

This workflow generates video datasets for training Wan LoRAs with AI Toolkit. It is not intended for showcase or cinematic video generation.

It uses Painter I2V to add minimal, natural motion to still images, producing short video clips suitable for LoRA training.

The workflow supports batch processing and automatically generates caption text files through LM Studio, using a locally hosted vision-language model (VLM).

Caption generation requires LM Studio running locally with a compatible vision-language model.

Model selection and LM Studio setup are outside the scope of this workflow.
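For anyone who wants to reproduce or debug the captioning step outside ComfyUI, here is a minimal sketch of the kind of request involved. It assumes LM Studio's OpenAI-compatible server on its default address (`http://localhost:1234/v1`), an already loaded vision model, and AI Toolkit's convention of a `.txt` caption sharing the media file's base name. The prompt, the `temperature` value, and all helper names (`build_caption_request`, `request_caption`, `write_caption`) are hypothetical illustrations, not this workflow's actual nodes.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Assumption: LM Studio's OpenAI-compatible server on its default port.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"

# Hypothetical prompt; adjust it to the captioning style your LoRA needs.
CAPTION_PROMPT = "Describe this image in one concise sentence for a training caption."


def caption_path_for(media_path: Path) -> Path:
    """Caption file shares the media file's base name, with a .txt extension."""
    return media_path.with_suffix(".txt")


def build_caption_request(image_bytes: bytes, model: str, mime: str = "image/png") -> dict:
    """Build an OpenAI-style chat request with the image inlined as a base64 data URL."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": CAPTION_PROMPT},
                    {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
                ],
            }
        ],
        "temperature": 0.2,
    }


def request_caption(payload: dict, url: str = LMSTUDIO_URL, timeout: float = 120.0) -> str:
    """POST the request to the local server and return the caption text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"].strip()


def write_caption(media_path: Path, caption: str) -> Path:
    """Write the caption next to the media file and return the caption's path."""
    out = caption_path_for(media_path)
    out.write_text(caption + "\n", encoding="utf-8")
    return out
```

A typical call would be `write_caption(clip, request_caption(build_caption_request(img.read_bytes(), model="your-vlm-id")))`, where `your-vlm-id` is whatever identifier LM Studio shows for the loaded model. Captioning the still image rather than the rendered clip keeps the VLM request to a single frame.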

Images are resized in two stages: first downscaled to reduce VLM load during captioning, then resized to a fixed 512×768, which is the recommended video resolution for AI Toolkit training.
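The two resize stages can be sketched as plain size arithmetic. This is an illustration under stated assumptions, not the workflow's node settings: the 1024 px cap for the captioning stage is hypothetical, 512×768 is read as width × height (portrait), and the second stage is assumed to center-crop to the target aspect ratio before resizing so frames are not stretched.

```python
from typing import Tuple

# Assumptions (not taken from the workflow): stage 1 caps the longest side at
# 1024 px, and 512x768 is interpreted as width x height (portrait).
CAPTION_MAX_SIDE = 1024
TARGET_W, TARGET_H = 512, 768


def stage1_size(w: int, h: int, max_side: int = CAPTION_MAX_SIDE) -> Tuple[int, int]:
    """Downscale (never upscale) so the longest side fits max_side, keeping aspect ratio."""
    scale = min(1.0, max_side / max(w, h))
    return max(1, round(w * scale)), max(1, round(h * scale))


def stage2_crop_box(w: int, h: int, tw: int = TARGET_W, th: int = TARGET_H) -> Tuple[int, int, int, int]:
    """Center crop box (left, top, right, bottom) matching the target aspect ratio.

    Resizing this box to tw x th afterwards yields a fixed-size frame without stretching.
    """
    if w * th > h * tw:
        # Source is wider than the target aspect: trim the left and right edges.
        crop_w = h * tw // th
        left = (w - crop_w) // 2
        return left, 0, left + crop_w, h
    # Source is taller than (or equal to) the target aspect: trim top and bottom.
    crop_h = w * th // tw
    top = (h - crop_h) // 2
    return 0, top, w, top + crop_h
```

With Pillow, the second stage would then be `img.crop(box).resize((TARGET_W, TARGET_H))`. Cropping before resizing trades a little edge content for undistorted proportions; a stretch-to-fit resize would keep the whole frame at the cost of distortion.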

⚠️ VRAM usage is very high. Between Wan2.2 I2V, Painter I2V, and dual (high-noise and low-noise) sampling, even Q4 GGUF models can push an RTX 5090 (32 GB) close to its VRAM limit.

Description

Initial release of the AI Toolkit workflow for generating Wan LoRA training video datasets.

Designed for dataset creation and experimentation.