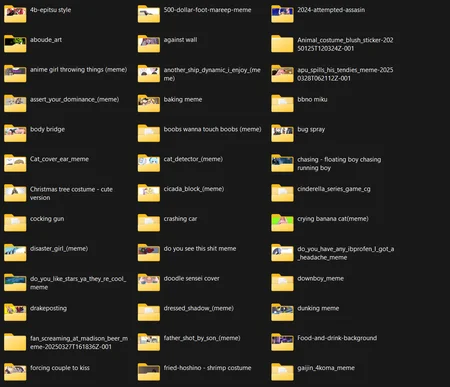

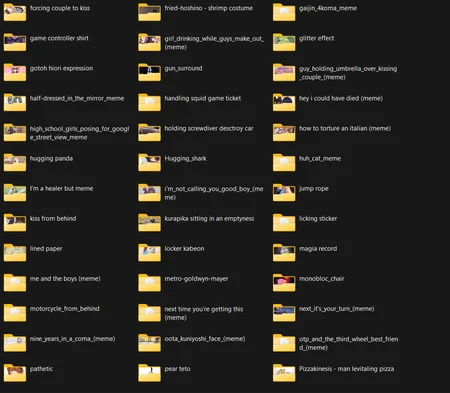

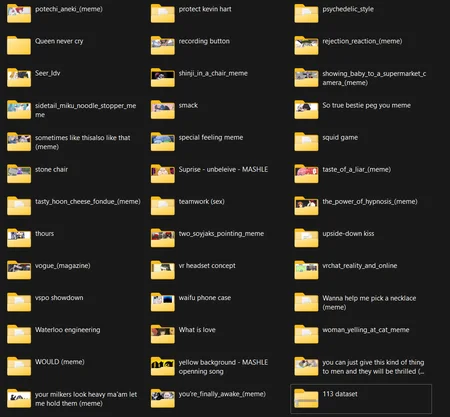

These are all (well, most) of the datasets for my LoRAs that are still untrained or that failed to train.

Unfortunately, some of my datasets were lost when I deleted my A1111 WebUI install, because I had saved most of my training data under the same folder :D

I didn't keep the tag files for these. I believe everyone has their own method of tagging and of handling image quality.

I've included some of my notes and opinions in some of the datasets.

For some reason, you'll find more images for memes when scraping Danbooru.

My usual training settings:

LR: 3e-4 to 5e-4

TE (text encoder) LR: 5e-5 to 6e-5

min_snr_gamma: 7-8 (disable when training on Noob)

ip_noise_gamma: 0.05

optimizer: AdamW8bit or AdaFactor
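To make the settings above concrete, here is a minimal sketch that turns them into trainer flags. It assumes the kohya-ss sd-scripts trainer (the post doesn't say which trainer is used) and maps "LR" to `--unet_lr`, which is also an assumption. The `build_training_args` helper and the "noob in the filename" check are hypothetical, just a way to show min_snr_gamma being switched off for NoobAI base models.

```python
def build_training_args(base_model: str, optimizer: str = "AdamW8bit") -> list:
    """Hypothetical helper: build sd-scripts style flags from the ranges above.

    Values are picked from inside each listed range; adjust per dataset.
    """
    args = [
        "--unet_lr=4e-4",          # LR: 3e-4 to 5e-4 (assumed to mean the UNet LR)
        "--text_encoder_lr=5e-5",  # TE LR: 5e-5 to 6e-5
        "--ip_noise_gamma=0.05",   # ip_noise_gamma: 0.05
        f"--optimizer_type={optimizer}",  # AdamW8bit or AdaFactor
    ]
    # min_snr_gamma 7-8, but disabled for NoobAI models.
    # Detecting "Noob" from the filename is a hypothetical shortcut.
    if "noob" not in base_model.lower():
        args.append("--min_snr_gamma=7")
    return args


print(build_training_args("illustriousXL_v01.safetensors"))
print(build_training_args("noobaiXLVpred.safetensors", optimizer="AdaFactor"))
```

Leaving the flag out entirely, rather than passing 0, keeps the sketch from depending on how the trainer treats a zero value.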

For concepts with fewer than 2 people, I use network_dim 8-16. Concepts with more variety and 2 or more people get a higher dim rank, 16-32.
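The dim rule above can be written as a small lookup. This is only a sketch: `pick_network_dim` is a hypothetical name, and because the rule doesn't say what to do with a single-person concept that has a lot of variety, the code assumes that either condition (2+ people or high variety) moves you to the 16-32 range.

```python
def pick_network_dim(num_people: int, high_variety: bool) -> tuple:
    """Return an inclusive (low, high) network_dim range for a concept LoRA.

    Assumption: 2+ people OR high variety is enough to use the higher range.
    """
    if num_people >= 2 or high_variety:
        return (16, 32)
    return (8, 16)  # fewer than 2 people, simple concept


print(pick_network_dim(1, False))
print(pick_network_dim(3, True))
```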

For LoRAs with text, I use Prodigy when the text is longer than 10 letters. AdamW8bit also works, but you may need to adjust the batch size and repeats until you find a good recipe. A higher dim rank may work well for longer text, but at the cost of learning more unnecessary details.
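A sketch of the optimizer choice for text LoRAs. The helper name `pick_optimizer` is hypothetical, and it assumes "letters" means alphabetic characters only, so spaces and punctuation are not counted toward the 10-letter threshold.

```python
def pick_optimizer(text: str = "") -> str:
    """Pick an optimizer for a LoRA based on the text it has to learn."""
    # Assumption: count only alphabetic characters as "letters".
    letters = sum(ch.isalpha() for ch in text)
    if letters > 10:
        return "Prodigy"
    return "AdamW8bit"  # short or no text: AdamW8bit, tune batch size/repeats


print(pick_optimizer("GOOD MORNING"))  # 11 letters
print(pick_optimizer("HELLO WORLD"))   # 10 letters
```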

Illustrious 0.1 is bad at learning these letters: W, P, U, D, L, no matter how much you increase the epochs, repeats, or batch size. NoobVpred learns text better: it learns from left to right and becomes more consistent over time, but it usually struggles with the last words until it gets enough steps.

Style LoRAs tend to give bad results for me when the dim rank is set lower than 32.

For epochs and the number of repeats, I just pick numbers based on my understanding of the checkpoint I'm using for training.

Example: say I'm training a meme LoRA that uses some poses the model already knows. I'll adjust the repeats and epochs to get somewhere between 500 and 1000 total steps. Usually, the model understands the concept after about 300 steps and stays consistent from there. This is just based on my experience with the LoRAs I've trained, so it's an observation, not a fact.
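Picking repeats and epochs to land in the 500-1000 step window is simple arithmetic. The sketch below assumes the usual approximation that each epoch sees every image `repeats` times, so steps per epoch is `ceil(images * repeats / batch_size)`. Real trainers with aspect-ratio bucketing can come out slightly different. The helper names and the search limits (`max_repeats`, `max_epochs`) are hypothetical.

```python
import math


def total_steps(num_images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """Approximate total optimizer steps for a run."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


def plan_schedules(num_images, batch_size, target=(500, 1000),
                   max_repeats=20, max_epochs=20):
    """List (repeats, epochs, steps) combos whose total lands in the target range."""
    low, high = target
    plans = []
    for repeats in range(1, max_repeats + 1):
        for epochs in range(1, max_epochs + 1):
            steps = total_steps(num_images, repeats, epochs, batch_size)
            if low <= steps <= high:
                plans.append((repeats, epochs, steps))
    return plans


# 40 images at batch size 2: show a few candidate schedules.
for repeats, epochs, steps in plan_schedules(40, 2)[:5]:
    print(f"repeats={repeats} epochs={epochs} -> {steps} steps")
```

Pairs with more epochs and fewer repeats give more checkpoints to compare, which helps when checking whether the concept has settled around the 300-step mark.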

I'm gonna write an article about this later, once I've trained some LoRAs and taken screenshots of the images they generate at different epochs. :D

Thanks for the support from everyone on this site.